Improve C# App Performance by Eliminating Nested Loop Lookups

Data lookups can often cause a common performance problem to be introduced into code. In this post, we’ll look at an example of that problem and one possible solution.

For this example, we’ll be using a C# console application that reads from the country table of the MySql sakila sample database. However, the refactoring solution presented is language and database agnostic.

This solution is only meant to demonstrate a common problem with nested loops. Normally, when we have associations with foreign keys like we have in the sakila database, we could just run a join on the data and be done with it. However, I’ve specifically set this example up to use a nested loop that runs a subquery, just to illustrate the point.

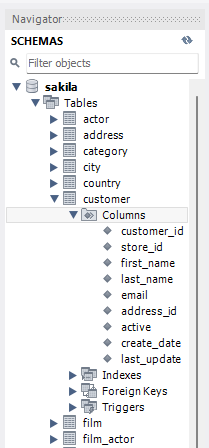

The MySql sakila database customers table schema is shown in the MySQL Workbench screenshot below:

The application’s Main method, shown below, opens a connection to the MySql database and calls a method called GetActiveCustomerData() to get a list of customers to be processed.

To simulate a larger data set, each customer is loaded into the customerList variable 100 times, resulting in a total of 58,400 items in the list.

Then, we loop through the customer data to build a list of customer invoices.

static void Main(string[] args)

{

try

{

var startTime = DateTime.Now;

using (MySqlConnection conn = GetDbConnection())

{

var customerInvoices = new List<Invoice>();

conn.Open();

var customerList = GetActiveCustomerData(conn);

var invoiceReports = new List<Invoice>();

foreach (var customer in customerList)

{

var address = GetAddress(conn, customer);

var invoice = new Invoice(customer, address);

customerInvoices.Add(invoice);

}

ReportMetrics(startTime);

}

}

catch (Exception e)

{

Console.WriteLine("Error: {0}", e.ToString());

}

}

The customer data is returned as a list containing instances of a class called Customer, which has the following structure:

public class Customer

{

public int CustomerId { get; set; }

public string FirstName { get; set; }

public string LastName { get; set; }

public int AddressId { get; set; }

}

The application needs to take the customer data and convert it to the invoice format. The invoice format is stored as a list of instances of the class Invoice, which has the following structure:

public class Invoice

{

public Invoice(Customer customer, string address)

{

CustomerName = String.Format("{0}, {1}", customer.LastName, customer.FirstName);

Address = address;

}

public string CustomerName { get; set; }

public string Address { get; set; }

}

The constructor for the Invoice class requires two parameters: An instance of the Customer class and the address string. The Customer class only has the AddressId, stored in the class’s AddressId parameter. We’ll have to look up the address from the database. We can see that this lookup is happening in the Main method in these lines of code:

foreach (var customer in customerList)

{

var address = GetAddress(conn, customer);

var invoice = new Invoice(customer, address);

customerInvoices.Add(invoice);

}

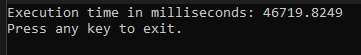

I have added some code that reports the total execution time of the application. It takes 46.7 seconds to run the process:

The list of customers only has 58,400 items in it. 46 seconds seems like a long time for that small of a data set. Let’s run the Visual Studio profiler and see if we can get some insight into what’s happening here.

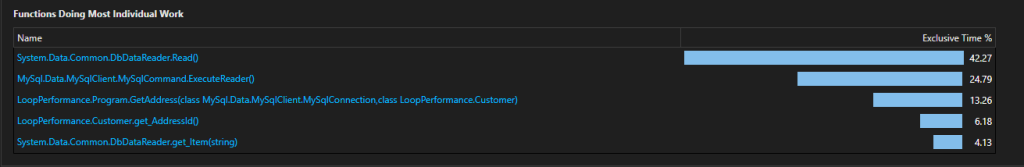

The profile says that 42.27% of the execution time is being spent in the DbDataReader.Read(). If we trace the path though the profile output, we can see the GetAddress() method is being called. Let’s take a closer look at that method:

static String GetAddress(MySqlConnection conn, Customer customer)

{

var address = "";

string query = "SELECT address_id, address FROM address";

MySqlCommand cmd = new MySqlCommand(query, conn);

MySqlDataReader rdr = cmd.ExecuteReader();

while (rdr.Read())

{

if (int.TryParse(rdr["address_id"].ToString(), out int addressId))

{

if (addressId == customer.AddressId)

{

address = rdr["address"].ToString();

}

}

else

{

throw new Exception(String.Format("Address not found for customer id {0}", customer.CustomerId));

}

}

rdr.Close();

return address;

}

Oops! This method is querying the database and looping through every row in the address table until it finds the row that matches the customer and then returning the address.

To an experienced software developer, what’s going on here is going to seem obvious. However, it is surprisingly easy, especially for beginners, to make mistakes like this when the code is more complicated than what is shown in this contrived example.

So, what’s the issue here? Remember that GetAddress() is also called within a loop in our Main method. The loop in the Main method is processing 58,400 items of invoice data. So it’s iterating 58,400 times. The address table has 603 rows in it. That means that the loop in the called method is iterating 603 times for EVERY ITERATION of the foreach loop in the Main method. That means the total iterations for both loops is 35,215,200 times! (58,400 * 603 = 35,215,200)

This is a common problem with nested loops. However, another problem here is that we’re running the query to get the address data every time GetAddress() is called. This means we’re hitting the database 58,400 times, once for each iteration of the outer loop, creating an unnecessary load on the database.

So, how do we solve this problem? One approach is to load the lookup table data into memory. Since the table only has 603 rows in it, and we only need the address in this example, we can load this data into memory without too much impact on memory consumption. Let’s look at an example using this approach.

We’ve renamed GetAddress to GetAddresses and refactored the logic. The function now returns a dictionary loaded with the address ids as the keys and the address string as the values.

Please note: For simplicity, the function shown below isn’t doing any validation on the database values. In a real-world scenario, we might want to make sure we can successfully parse the address_id before assigning it to a value.

static Dictionary<int, string> GetAddresses(MySqlConnection conn)

{

var addresses = new Dictionary<int, string>();

string query = "SELECT address_id, address FROM address";

MySqlCommand cmd = new MySqlCommand(query, conn);

MySqlDataReader rdr = cmd.ExecuteReader();

while (rdr.Read())

{

var addressId = int.Parse(rdr["address_id"].ToString());

if (!addresses.ContainsKey(addressId))

{

addresses.Add(addressId, rdr["address"].ToString());

}

}

rdr.Close();

return addresses;

}

We’ve also refactored the logic in the Main method accordingly. GetAddresses() is only called once, before the customer data is iterated. We’ve also modified the method to get the address string from the addresses dictionary that has now been loaded into memory.

static void Main(string[] args)

{

try

{

var startTime = DateTime.Now;

using (MySqlConnection conn = GetDbConnection())

{

var customerInvoices = new List<Invoice>();

conn.Open();

var addresses = GetAddresses(conn);

var customerList = GetActiveCustomerData(conn);

var invoiceReports = new List<Invoice>();

foreach (var customer in customerList)

{

var address = addresses[customer.AddressId];

var invoice = new Invoice(customer, address);

customerInvoices.Add(invoice);

}

ReportMetrics(startTime);

}

}

catch (Exception e)

{

Console.WriteLine("Error: {0}", e.ToString());

}

}

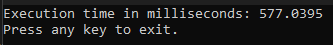

This refactoring reduces the number of loop iterations back down to 58,400. Once for each item in the list of customers. Let’s run the application again and see how performance is looking now:

577 milliseconds! The results are looking much better now.

Summary: By replacing a nested database query inside a loop with a dictionary-based in-memory lookup, we reduced the number of database queries from 58,400 to just 1 and cut execution time from 46.7 seconds to 577 milliseconds.

By loading the data from the database into memory, we reduced the total number of iterations of the loop, as the nested loop is no longer necessary. We also reduced the number of queries we need to execute on the database while iterating through the customer data from 58,400 to 1 as well.

The solution above works great when the data loaded into memory has a small enough footprint to not be problematic. For larger data sets, where loading the data into memory is not practical, alternative solutions may need to be considered. We will look at some of those solutions in upcoming posts.

Nested loops in code like this may seem fine when your data set is small, but they will present problems as your data scales. Especially if database I/O is involved. You don’t need to refactor immediately, but I hope this post gives you a clear path forward when you run into performance problems like this.